Image of a single container being transported by OiMax

Before the transition to Docker containers started at eLife, a single service deployment pipeline would pick up the source code repository and deploy it to one or more virtual machines on AWS (EC2 instances booted from a standard AMI). As the pipeline went across the environments, it repeated the same steps over and over in testing, staging and production. This is the story of the journey from a pipeline based on source code for every stage to a pipeline deploying an immutable container image; the goals pursued here are time savings and a reduced failure rate.

The end point is seen as an intermediate step before getting to containers deployed into an orchestrator, as our infrastructure wasn't ready to accept a Kubernetes cluster when we started the transition, nor was Kubernetes itself trusted yet for stateful, old-school workloads such as running a PHP application that writes state on the filesystem. Achieving containers-over-EC2 allows developers to target Docker as the deployment platform, without yet realizing the cost savings related to the bin packing of those containers onto anonymous VMs.

Starting state

A typical microservice for our team would consist of a Python or PHP codebase that can be deployed onto a usually tiny EC2 instance, or onto more than one if user-facing. Additional resources that are usually not really involved in the deployment process are created out of band (with Infrastructure as Code) for this service, like a relational database (outsourced to RDS), a load balancer, DNS entries and similar cloud resources.

Every environment replicates this setup, whether it is a ci environment for testing the service in isolation, or an end2end one for more large-scale testing, or even a sandbox for exploratory, manual testing. All these environments try to mimic the prod one, especially end2end which is supposed to be a perfect copy on fewer resources.

A deployment pipeline has to go through environments as a new release is promoted from ci to end2end and prod. The amount of work that has to be repeated to deploy from source on each of the instances is sizable, however:

- ensure the PHP/Python interpreter is correctly set up and all extensions are installed

- checkout the repository, which hopefully isn't too large

- run scripts if some files need to be generated (from CSS to JS artifacts and anything similar)

- install or update the build-time dependencies for these tasks, such as a headless browser to generate critical CSS

- run database migrations, if needed

- import fixture data, if needed

- run or update stub services to fill in dependencies, if needed (in testing environments)

- run or update real sidecar services such as a queue broker or a local database, if present

This ever-expanding sequence of operations for each stage can be optimized, but in the end the best choice is not to repeat work that only needs to be performed once per release.

There is also a concern about the end result of a deploy being different across environments. This difference could be in state, such as a JS asset served to real users being different from what you tested; but also in outcome, as a process that runs perfectly in testing may run into an APT repository outage when in production, failing your deploy halfway through, only on one of the nodes. Not repeating operations leads not just to time savings but to a simpler system in which fewer operations can fail, simply because there are fewer of them.

Setting a vision

I have previously automated builds that generate a set of artifacts from the source code repository and then deploy them across environments, for example zipping all the PHP or Python code into an archive or into some other sort of package. This approach works well in general, and it is what compiled languages naturally do since they can't get away with recompiling in every environment. However, artifacts do not take into account OS-level dependencies like the Python or PHP version with their configuration, along with any other setup outside of the application folder: a tree of directories for the cache, users and groups, deb packages to install.

Container images promise to ship a full operating system directory tree, which will run in any environment only sharing a kernel with its host machine. Seeing docker build as the natural evolution of tar -cf ... | bzip2, I set out to port the build processes of the VMs into portable container images for each service. We would then still be deploying these images as the only service on top of an EC2 virtual machine, but each deployment stage should consist only of pulling one or more images and starting them with a docker-compose configuration. The stated goal was to reduce the time from commit to live, and the variety of failures that can happen along the way.
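As a rough illustration of what each stage is reduced to, the sketch below assumes a docker-compose.yml that reads the image tag from an IMAGE_TAG variable. That variable, the deploy_stage function and the DRY_RUN switch are hypothetical names introduced for this example, not our actual tooling.

```shell
#!/bin/sh
# Sketch of a per-stage deploy: no checkout, no build, only pull and start.
# IMAGE_TAG, DRY_RUN and deploy_stage are illustrative names (assumptions).

# Print the command instead of running it, unless DRY_RUN is set to 0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# $1: tag of the image built once at the start of the pipeline,
#     for example the commit SHA.
deploy_stage() {
    IMAGE_TAG="$1"
    export IMAGE_TAG            # assumed to be interpolated by docker-compose.yml
    run docker-compose pull     # fetch the prebuilt image(s)
    run docker-compose up -d    # recreate the containers from the new image
}
```

With DRY_RUN left at its default, calling deploy_stage with a tag only prints the two commands; the same two commands would run unchanged in every environment.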

Image immutability and self-sufficiency

To really save on deployment time, the images being produced for a service must be the same across environments. There are some exceptions like a ci derivative image that adds testing tools to the base one, but all prod-like environments should get the same artifact; this is not just for reproducibility but primarily for performance.

The approach we took was to also isolate services into their own containers, for example creating two separate fpm and nginx images (wsgi and nginx for Python); or to use a standard nginx image where possible. Other specialized testing images like our own selenium extended image can still be kept separate.

The isolation of images doesn't just make them smaller than a monolith, but provides Docker-specific advantages like independent caching of their layers. If you have a monolith image and you modify your composer.json or package.json file, you're in for a large rebuild. Segregating responsibilities leads instead to only one or two of the application images being rebuilt, so you never have to reinstall the packages used for Selenium debugging. This can also be achieved by embedding various targets (FROM ... AS ...) into a single Dockerfile, and having docker-compose build one of them at a time with the build.target option.
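A minimal sketch of the single-Dockerfile variant follows. The base image, stage names and paths are assumptions chosen for illustration; the script only writes the two files into a temporary directory so they can be inspected.

```shell
#!/bin/sh
# Sketch: one Dockerfile with two targets, selected by docker-compose.
# Base image, stage names and paths are illustrative assumptions.
workdir=$(mktemp -d)

cat > "$workdir/Dockerfile" <<'EOF'
# Application stage: its layers are cached independently of the CI stage.
FROM php:7.3-fpm AS app
COPY composer.json composer.lock /app/
COPY src/ /app/src/

# CI derivative: adds the tests on top of the application stage.
FROM app AS ci
COPY tests/ /app/tests/
EOF

cat > "$workdir/docker-compose.yml" <<'EOF'
version: '3.4'
services:
  app:
    build:
      context: .
      target: app
  ci:
    build:
      context: .
      target: ci
EOF

echo "Wrote $workdir/Dockerfile and $workdir/docker-compose.yml"
# To build a single target: (cd "$workdir" && docker-compose build ci)
```

The target key needs Compose file format 3.4 or later, which is why that version is declared.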

When everything that is common across the environments is bundled within the images, what remains is configuration in the form of docker-compose.yml and other files:

- which container images should be running and exposing which ports

- which commands and arguments the various images should be passed when they are started

- environment variables to pass to the various containers

- configuration files that can be mounted as volumes

Images would typically have a default configuration file in the right place, or be able to work without one. A docker-compose configuration can then override that default with a custom configuration file, as needed.
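To make the four kinds of configuration concrete, here is a hedged sketch of what such a file could look like for one environment. The service name, image name, command, variables and paths are all hypothetical; the script simply writes the file to a temporary directory.

```shell
#!/bin/sh
# Sketch: per-environment docker-compose.yml wrapping an immutable image.
# Service, image, command, variables and paths are illustrative assumptions.
compose_file="$(mktemp -d)/docker-compose.yml"

cat > "$compose_file" <<'EOF'
version: '3.4'
services:
  web:
    # which image should be running
    image: example/app:${IMAGE_TAG}
    # which ports it exposes
    ports:
      - "8080:80"
    # command and arguments passed at start
    command: ["bin/server", "--workers", "4"]
    # environment variables
    environment:
      APP_ENV: end2end
    # a mounted file overriding the default shipped inside the image
    volumes:
      - ./config/end2end.yml:/app/config.yml:ro
EOF

echo "Wrote $compose_file"
```

Only this file and the mounted configuration differ between environments; the image referenced by the tag stays the same.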

One last responsibility of portable Docker images is their definition of a basic HEALTHCHECK. This means an image has to ship enough basic tooling to, for example, load a /ping path on its own API and verify a 200 OK response is coming out. In the case of classic containers like PHP FPM or a WSGI Python container, this implies some tooling will be embedded into the image to talk to the main process through its own protocol (FastCGI, in the case of FPM) rather than through HTTP.

It would be a pity to reinvent the lifecycle management of the container (being started, then healthy or unhealthy after a series of probes) when we can define a simple command that both docker-compose and actual orchestrators like Kubernetes can execute to detect the readiness of the new containers after deploy. I used to ship smoke tests with the configuration files to use, but these have largely been replaced by polling for a health status on the container itself.
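Polling for that status can be sketched as below, assuming the image defines a HEALTHCHECK. The function names wait_healthy and health_status are hypothetical helpers; the docker inspect format string reads the status Docker computes from the probes.

```shell
#!/bin/sh
# Sketch: wait for a container to report "healthy" after a deploy.
# wait_healthy and health_status are hypothetical helper names.

# Ask Docker for the status derived from the image's HEALTHCHECK:
# "starting", "healthy" or "unhealthy".
health_status() {
    docker inspect --format '{{.State.Health.Status}}' "$1"
}

# $1: container name or id
# $2: maximum number of attempts (default 30)
# $3: seconds to sleep between attempts (default 2)
wait_healthy() {
    attempts="${2:-30}"
    interval="${3:-2}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        status=$(health_status "$1")
        case "$status" in
            healthy)   return 0 ;;
            unhealthy) echo "$1 is unhealthy" >&2; return 1 ;;
        esac
        i=$((i + 1))
        sleep "$interval"
    done
    echo "$1 did not become healthy in time" >&2
    return 1
}
```

A deploy script could call wait_healthy on each new container and fail the stage on a non-zero exit code.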

Image size

Multi-stage builds are certainly the tool of choice to keep images small: perform expensive work in separate stages, and whenever possible only copy files into the final stage rather than executing commands that use the filesystem and bloat the image with their leftover files.

A consolidated RUN command is also a common trick to bundle together different processes like apt-get update and rm -rf /var/lib/apt/lists/* so that no intermediate layers are produced, and temporary files can be deleted before a snapshot is taken.
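A minimal sketch of such a consolidated instruction, written to a temporary file for inspection; the base image and the curl package are arbitrary choices made for the example.

```shell
#!/bin/sh
# Sketch: a Dockerfile fragment with a single consolidated RUN instruction.
# The base image and the installed package are illustrative assumptions.
dockerfile=$(mktemp)

cat > "$dockerfile" <<'EOF'
FROM debian:stable-slim
# Update, install and clean up within one layer, so the APT package
# lists are deleted before the layer snapshot is taken.
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl \
    && rm -rf /var/lib/apt/lists/*
EOF

echo "Wrote $dockerfile"
```

Splitting the three commands into separate RUN instructions would instead keep the package lists in an earlier layer even after they are deleted.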

To find out where this optimization is needed, however, some introspection is required. You can run docker inspect over a locally built image to check its Size field and then docker history to see the various layers. Large layers are hopefully being shared between one image and the next if you are deploying to the same server. Hence it pays to verify that, if the image is big, most of its size comes from ancestor layers that seldom change.

A final warning about sizes is related to images with many small files, like node_modules/ contents. These images may exhaust the inodes of the host filesystem well before they fill up the available space. This doesn't happen when deploying source code to the host directly as files can be overwritten, but every new version of a Docker image being deployed can easily result in a full copy of folders with many small files. Docker's prune commands often help by targeting various instances of containers, images and other leftovers, whereas df -i (as opposed to df -h) diagnoses inode exhaustion.
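The check can be automated along these lines. This is a sketch assuming GNU df and the default Docker data directory; the function names and the 80% threshold are hypothetical.

```shell
#!/bin/sh
# Sketch: warn when inode usage under the Docker data directory is high.
# Function names and the threshold are illustrative assumptions.

# Read `df -Pi` output (GNU df) on stdin and print the inode use
# percentage, without the % sign, of the first filesystem listed.
inode_use_percent() {
    awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# $1: path to check (default /var/lib/docker)
# $2: threshold in percent (default 80)
check_inodes() {
    used=$(df -Pi "${1:-/var/lib/docker}" | inode_use_percent)
    if [ "$used" -ge "${2:-80}" ]; then
        echo "inode usage at ${used}%: consider docker system prune" >&2
        return 1
    fi
}
```

Run periodically on each host, check_inodes gives an early warning before deploys start failing for lack of inodes.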

Underlying nodes

Shipping most of the stack in a Docker image makes it easier to change it as it's part of an immutable artifact that can be completely replaced rather than a stateful filesystem that needs backward compatibility and careful evolution. For example, you can just switch to a new APT repository rather than transition from one to another by removing the old one; only install new packages rather than having to remove the older ones.

The host VMs become leaner and lose responsibilities, becoming easier to test and less variable; you could almost say all they have to run is a Docker daemon and very generic system software like syslog, but nothing application-specific apart from container dependencies such as providing a folder for config files to live in. Whatever Infrastructure as Code recipes you have in place for building these VMs, they will become easier and faster to test, with the side effect of also becoming easier to replace, scale out, or retire.

An interesting side effect is that most of the first stages of project pipelines no longer need a dedicated CI instance to deploy to. In a staging environment, you actually need to replicate a configuration similar to production, like using a real database; but in the first phases, where the project is tested in isolation, the test suite can effectively run on a generic Jenkins node that works for all projects. I wouldn't run multiple builds at the same time on such a node as they may have conflicts on host ports (everyone likes to listen on localhost:8080), but as long as the project cleans up after failure with docker-compose down -v or similar, a new build of a wholly different project can be run with practically no interaction.
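One way to guarantee that cleanup is a shell trap, sketched here. The ci service name and the ./project_tests.sh script are hypothetical, and COMPOSE is a variable introduced only so the command can be swapped out.

```shell
#!/bin/sh
# Sketch: run a project's tests on a shared CI node and always clean up.
# The "ci" service and ./project_tests.sh are hypothetical names.
COMPOSE="${COMPOSE:-docker-compose}"

# The function body runs in a subshell, so the EXIT trap fires when it
# ends, whether the tests passed or failed.
ci_build() (
    set -e
    trap '$COMPOSE down -v' EXIT    # remove containers, networks and volumes
    $COMPOSE up -d
    $COMPOSE run --rm ci ./project_tests.sh
)
```

Because the trap runs on any exit, a failed test run still frees the host ports for the next project's build.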

Transition stages

After all this care in producing good images and cleaning up the underlying nodes, we can look at the stages in which a migration can be performed.

A first rough breakdown of the complete migration of a service can be aligned on environment boundaries:

- use containers to run tests in CI (xUnit tools, Cucumber, static checking)

- use containers to run locally (e.g. mounting volumes for direct feedback)

- roll out to one or more staging environments

- roll out to production

This is the path of least resistance, and correctly pushes risk first to less important environments (testing) and only later to staging and production; hence you are free to experiment and break things without fear, acquiring knowledge of the container stack for later on. I think it runs the risk of leaving some projects halfway, where the testing stages have been ported but production and staging still run with the host-checks-out-source-code approach.

A different way to break this down is to split the work by the individual processes involved rather than by environment. For example, consider an application with a server listening on some port, a CLI and a long-running process such as a queue worker:

- start building an image and pulling it on each environment, from CI to production

- try running CLI commands through the image rather than the host

- run the queue worker from the image rather than on the host

- stop old queue worker

- run the server, using a different port

- switch the upper layer (nginx, a load balancer, ...) to use the new container-based server

- stop old server

- remove source code from the host

Each of these slices can go through all the environments as before. You will be hitting production sooner, which means Docker surprises will propagate there (it's still not as stable as Apache or nginx); but issues that can only be triggered in production will happen on a smaller part of your application, rather than as a big bang of the first production deploy of these container images.

If you are using any dummy project, stub or simulator, they are also good candidates for being switched to a container-based approach first. They usually won't get to production however, as they will only be in use in CI and perhaps some of the other testing environments.

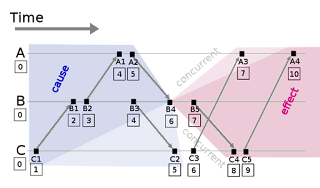

You can also see how this piece-wise approach lets you run both versions of a component in parallel, move between one and the other via configuration and finally remove the older approach when you are confident you don't need to roll back. At the start, using a Docker image doesn't seem like a huge change, but sometimes you end up with 50 modified files in your Infrastructure as Code repository, and 3-4 unexpected problems to get them through all the environments. This is essentially Branch by Abstraction applied to Infrastructure as Code: a very good idea for incremental migrations applied to an area that normally needs to move at a slower pace than application code.
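A small sketch of such a configuration switch for the CLI slice follows. Every name in it (APP_RUNTIME, APP_IMAGE, RUNNER, the bin/console paths) is hypothetical; the point is only that callers use app_cli and never need to know which implementation sits behind it.

```shell
#!/bin/sh
# Sketch: a wrapper that runs a CLI command either on the host or through
# the image, chosen by configuration. All names here are hypothetical.
APP_RUNTIME="${APP_RUNTIME:-host}"              # "host" or "container"
APP_IMAGE="${APP_IMAGE:-example/app:latest}"
RUNNER="${RUNNER:-}"                            # set to "echo" for a dry run

# Run the application's CLI with the given arguments.
app_cli() {
    case "$APP_RUNTIME" in
        container) $RUNNER docker run --rm "$APP_IMAGE" bin/console "$@" ;;
        host)      $RUNNER /srv/app/bin/console "$@" ;;
        *)         echo "unknown APP_RUNTIME: $APP_RUNTIME" >&2; return 1 ;;
    esac
}
```

Flipping APP_RUNTIME per environment moves one slice at a time, and flipping it back is the rollback.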